CEVR: Learning Continuous Exposure Value Representations for Single-Image HDR Reconstruction ICCV 2023

- Su-Kai Chen NYCU

- Hung-Lin Yen NYCU

- Yu-Lun Liu NYCU

- Min-Hung Chen NVIDIA

- Hou-Ning Hu MediaTek

- Wen-Hsiao Peng NYCU

- Yen-Yu Lin NYCU

Abstract

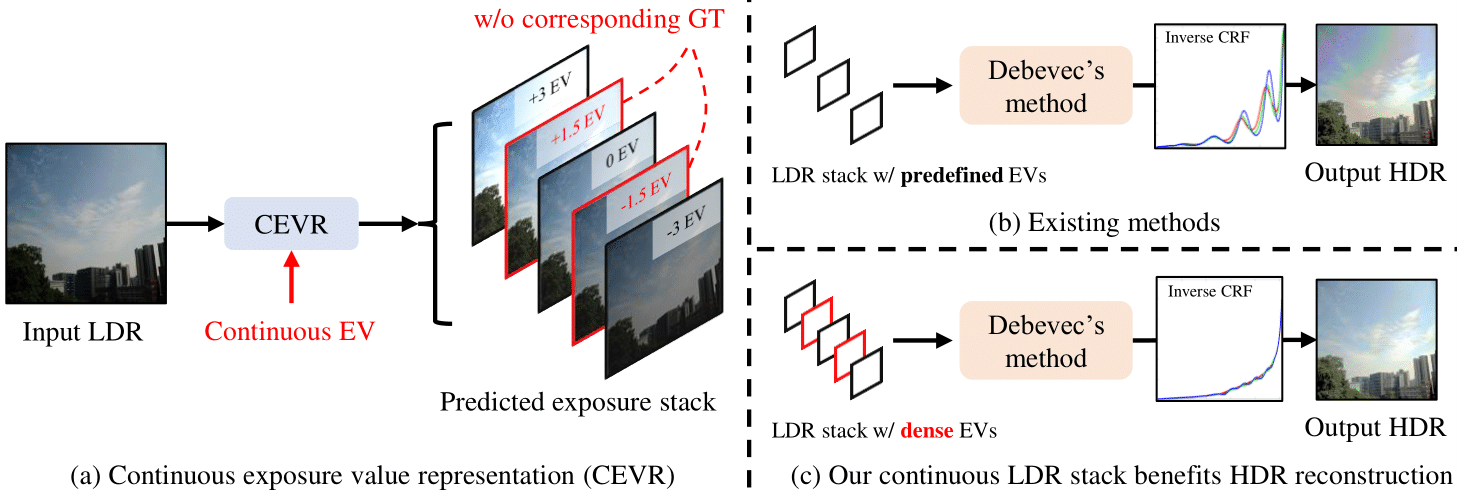

Deep learning has produced impressive results in reconstructing HDR images from LDR images. LDR stack-based methods perform single-image HDR reconstruction by first generating an LDR stack with a deep network and then building the HDR image from that stack. However, current methods generate the LDR stack with predetermined exposure values (EVs), which may limit the quality of HDR reconstruction. To address this, we propose the continuous exposure value representation (CEVR) model, which uses an implicit function to generate LDR images with arbitrary EVs, including those unseen during training. Our flexible approach generates a continuous stack with more images covering more diverse EVs, significantly improving HDR reconstruction. We use a cycle training strategy to supervise the model in generating LDR images at continuous EVs without corresponding ground truths. Experimental results show that our CEVR model outperforms existing methods.


Continuous LDR stack

Traditional indirect methods construct the stack from LDR images with predefined exposure values. In contrast, our approach exploits continuous exposure value information and constructs a denser stack that also includes LDR images at continuous exposure values unseen during training. We call this denser stack the continuous LDR stack. Compared with the stacks used by existing indirect methods, the continuous LDR stack allows a more accurate inverse camera response function to be recovered, which in turn improves the quality of the final HDR result.
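The page does not spell out how the stack is merged into an HDR image. A common route is a Debevec-Malik-style weighted average, where each pixel's radiance estimate is f⁻¹(z)/Δt and mid-tone pixels are trusted most. The minimal sketch below assumes the inverse camera response is already known (a plain gamma curve) instead of recovering it, and takes exposure time as 2**EV relative to EV 0; `merge_ldr_stack` and `hat_weight` are hypothetical names, not part of the CEVR code.

```python
import numpy as np

def hat_weight(z):
    """Triangle weight: 1 at mid-gray, 0 at fully dark or saturated pixels."""
    return 1.0 - np.abs(2.0 * z - 1.0)

def merge_ldr_stack(ldr_images, evs, gamma=2.2, eps=1e-6):
    """Merge LDR images (values in [0, 1]) taken at relative EVs into radiance.

    Assumption: the inverse camera response is a known gamma curve,
    f^-1(z) = z**gamma, rather than one recovered from the stack.
    Exposure time for each image is taken as 2**ev relative to EV 0.
    """
    num = np.zeros_like(ldr_images[0], dtype=np.float64)
    den = np.zeros_like(ldr_images[0], dtype=np.float64)
    for z, ev in zip(ldr_images, evs):
        w = hat_weight(z)
        # Per-image radiance estimate, weighted by how well exposed z is.
        num += w * (z ** gamma) / (2.0 ** ev)
        den += w
    return num / np.maximum(den, eps)

# Synthetic scene radiance and a dense stack sampled every 0.5 EV.
H = np.array([0.05, 0.2, 1.0, 3.0])
evs = np.linspace(-3.0, 3.0, 13)
stack = [np.clip((H * 2.0 ** ev) ** (1 / 2.2), 0.0, 1.0) for ev in evs]
hdr = merge_ldr_stack(stack, evs)
```

In this idealized setting the merge recovers `H`; saturated pixels get zero weight, so each radiance value comes only from images where it is well exposed. A denser EV grid simply gives each pixel more well-exposed samples to average.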

Continuous Exposure Value Representation (CEVR)

In order to generate the continuous LDR stack, we propose the CEVR model, which can generate LDR images with arbitrary exposure values. The generated LDR images with continuous exposure values are used to construct the continuous LDR stack.
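Conceptually, the model is a function of an input LDR image and a continuous EV, so building a stack is just a matter of which EVs you query. The sketch below uses a hypothetical closed-form stand-in, `toy_exposure_model`, that shifts exposure under an assumed gamma curve; it is not the CEVR network, only a placeholder with the same (image, ev) → image interface.

```python
import numpy as np

def toy_exposure_model(ldr, ev, gamma=2.2):
    """Hypothetical stand-in for a learned network f(ldr, ev).

    Undoes an assumed gamma curve, scales linear intensity by 2**ev,
    clips to the sensor range, and re-applies the curve.
    """
    linear = np.clip(ldr, 0.0, 1.0) ** gamma
    return np.clip(linear * 2.0 ** ev, 0.0, 1.0) ** (1.0 / gamma)

def build_stack(model, ldr, evs):
    """Query the model once per requested EV; returns {ev: ldr_image}."""
    return {float(ev): model(ldr, float(ev)) for ev in evs}

ldr = np.array([0.1, 0.5, 0.9])
# A fixed stack uses a few preset integer EVs...
fixed_stack = build_stack(toy_exposure_model, ldr, [-3, -2, -1, 0, 1, 2, 3])
# ...while a continuous model can be queried on an arbitrarily fine grid.
continuous_stack = build_stack(
    toy_exposure_model, ldr, np.arange(-3.0, 3.0 + 1e-9, 0.25)
)
print(len(fixed_stack), len(continuous_stack))  # 7 25
```

Over the same EV range, the 0.25-EV grid yields 25 images instead of 7, including EVs such as 0.25 or 1.75 that a fixed integer-EV stack never contains.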

Cycle training

For exposure values without corresponding ground truth, how can CEVR learn what an unseen exposure value means during training and still generate a reasonable result? To address this challenge, we designed a novel training strategy called cycle training. It involves two branches: one follows the conventional training approach, while the other divides the target exposure value into two incremental steps whose sum equals the target exposure value. The input is passed through the CEVR model twice, once for each step. In this way, the model learns explicitly how images change with exposure value and receives supervisory signals for exposure values that lack ground truth.
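The two branches can be sketched as two loss terms on the same ground-truth image. This is a minimal illustration under stated assumptions: the split point is drawn uniformly at random (the page does not say how it is chosen), an L1 loss is used, and `toy_exposure_model` is a hypothetical closed-form stand-in for the network, not CEVR itself.

```python
import numpy as np

def toy_exposure_model(ldr, ev, gamma=2.2):
    """Hypothetical stand-in for CEVR: gamma-curve exposure shift by `ev` stops."""
    linear = np.clip(ldr, 0.0, 1.0) ** gamma
    return np.clip(linear * 2.0 ** ev, 0.0, 1.0) ** (1.0 / gamma)

def l1(a, b):
    """Mean absolute error between two images."""
    return float(np.mean(np.abs(a - b)))

def cycle_training_losses(model, x, y, target_ev, rng):
    """Return (direct_loss, cycle_loss) for one training pair.

    x: source LDR image; y: ground-truth LDR image at relative EV target_ev.
    """
    # Branch 1: conventional supervision, a single jump to the target EV.
    direct_loss = l1(model(x, target_ev), y)
    # Branch 2: split the target into two steps that sum to target_ev.
    # Drawing the split uniformly at random is an assumption of this sketch.
    step_a = target_ev * rng.random()
    step_b = target_ev - step_a
    intermediate = model(x, step_a)       # no ground truth exists for step_a
    cycled = model(intermediate, step_b)  # supervised by the same y
    cycle_loss = l1(cycled, y)
    return direct_loss, cycle_loss

rng = np.random.default_rng(0)
x = np.array([0.05, 0.3, 0.6])
y = toy_exposure_model(x, 1.5)
print(cycle_training_losses(toy_exposure_model, x, y, 1.5, rng))  # both close to 0
```

Because `step_a + step_b` equals `target_ev`, the chained output can be scored against the same ground truth `y`, even though the intermediate image at `step_a` has no label of its own. In a real training loop the two losses would be combined (with weights the page does not specify) and backpropagated through both passes.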

Evaluation results (3.3 GB)

We provide a ZIP file containing the results of each compared method and a MATLAB script for evaluation on two datasets: the VDS dataset and the HDREye dataset. Download the ZIP file and run the script to compute the scores: PSNR on the tone-mapped images and HDR-VDP-2 on the HDR files.
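For readers who prefer Python to MATLAB, the PSNR part of the evaluation can be sketched as below. This is not the provided script: it assumes the tone-mapped images are already normalized to [0, 1], and it implements neither a tone-mapping operator nor HDR-VDP-2. The per-dataset mean and standard deviation follow the reporting format of the results table; `ddof=1` mirrors MATLAB's default `std`, but whether the provided script uses it is an assumption.

```python
import numpy as np

def psnr(pred, ref, peak=1.0):
    """PSNR in dB between two images with values in [0, peak]."""
    diff = np.asarray(pred, dtype=np.float64) - np.asarray(ref, dtype=np.float64)
    mse = float(np.mean(diff ** 2))
    if mse == 0.0:
        return float("inf")  # identical images
    return float(10.0 * np.log10(peak ** 2 / mse))

def summarize(scores):
    """Mean and sample standard deviation (N - 1) over per-image scores."""
    scores = np.asarray(scores, dtype=np.float64)
    return float(scores.mean()), float(scores.std(ddof=1))
```

A uniform error of 0.1 on a [0, 1] image gives an MSE of 0.01 and therefore a PSNR of 20 dB.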

Quantitative results

| Dataset | Method | PSNR, RH's TMO (mean) | PSNR, RH's TMO (std) | PSNR, KK's TMO (mean) | PSNR, KK's TMO (std) | HDR-VDP-2 (mean) | HDR-VDP-2 (std) |
|---|---|---|---|---|---|---|---|
| VDS | DrTMO | 25.49 | 4.28 | 21.36 | 4.50 | 54.33 | 6.27 |
| VDS | Deep chain HDRi | 30.86 | 3.36 | 24.54 | 4.01 | 56.36 | 4.41 |
| VDS | Deep recursive HDRI | 32.99 | 2.81 | 28.02 | 3.50 | 57.15 | 4.35 |
| VDS | Santos et al. | 22.56 | 2.68 | 18.23 | 3.53 | 53.51 | 4.76 |
| VDS | Liu et al. | 30.89 | 3.27 | 28.00 | 4.11 | 56.97 | 6.15 |
| VDS | CEVR (Ours) | 34.67 | 3.50 | 30.04 | 4.45 | 59.00 | 5.78 |
| HDREye | DrTMO | 23.68 | 3.27 | 19.97 | 4.11 | 46.67 | 5.81 |
| HDREye | Deep chain HDRi | 25.77 | 2.44 | 22.62 | 3.39 | 49.80 | 5.97 |
| HDREye | Deep recursive HDRI | 26.28 | 2.70 | 24.62 | 2.90 | 52.63 | 4.84 |
| HDREye | Santos et al. | 19.89 | 2.46 | 19.00 | 3.06 | 49.97 | 5.44 |
| HDREye | Liu et al. | 26.25 | 3.08 | 24.67 | 3.54 | 50.33 | 6.67 |
| HDREye | CEVR (Ours) | 26.54 | 3.10 | 24.81 | 2.91 | 53.15 | 4.91 |

Compared with existing methods, CEVR achieves the highest mean PSNR under both tone-mapping operators and the highest mean HDR-VDP-2 score on both the VDS and HDREye datasets.


Acknowledgements

This work was supported in part by the National Science and Technology Council (NSTC) under grants 111-2628-E-A49-025-MY3, 112-2221-E-A49-090-MY3, 111-2634-F-002-023, 111-2634-F-006-012, 110-2221-E-A49-065-MY3 and 111-2634-F-A49-010. This work was funded in part by MediaTek.

The website template was borrowed from Zip-NeRF.